Hello everyone! Chris here with a LARGE update on the status of our ball detection method. I have been working on an accurate and fast way to detect our silver ball as it moves across the labyrinth board.

Now, had our ball been some other color that is bright and easily distinguishable (such as red), we could have used the HSV colorspace to easily detect the ball by converting our camera images from RGB to HSV, and then thresholding the H-channel for red hues. In the HSV colorspace, colors are represented by a Hue (color), Saturation (color intensity/amount of greyness), and Value (brightness). Below is a visual for HSV from Wikipedia.
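To make the hue-thresholding idea concrete, here is a minimal per-pixel sketch using Python's standard `colorsys` module. The function name `is_red` and the threshold values are made up for illustration and are not from our project. In OpenCV the same idea would typically use `cv2.cvtColor` with `cv2.COLOR_BGR2HSV` followed by `cv2.inRange`, where 8-bit hue runs from 0 to 179 rather than 0 to 1.

```python
import colorsys

def is_red(r, g, b, hue_tol=0.05, min_sat=0.5, min_val=0.3):
    """Return True if an 8-bit RGB pixel falls in a (hypothetical) red hue band."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Red sits at both ends of the hue circle (h near 0.0 or near 1.0).
    near_red = h <= hue_tol or h >= 1.0 - hue_tol
    # Require some saturation and brightness so greys and blacks are rejected.
    return near_red and s >= min_sat and v >= min_val

print(is_red(220, 30, 40))    # a bright red pixel
print(is_red(192, 192, 192))  # a silver/grey pixel, which has zero saturation
```

A grey or silver pixel has no meaningful hue at all, which is part of why a hue threshold does not help with our ball.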

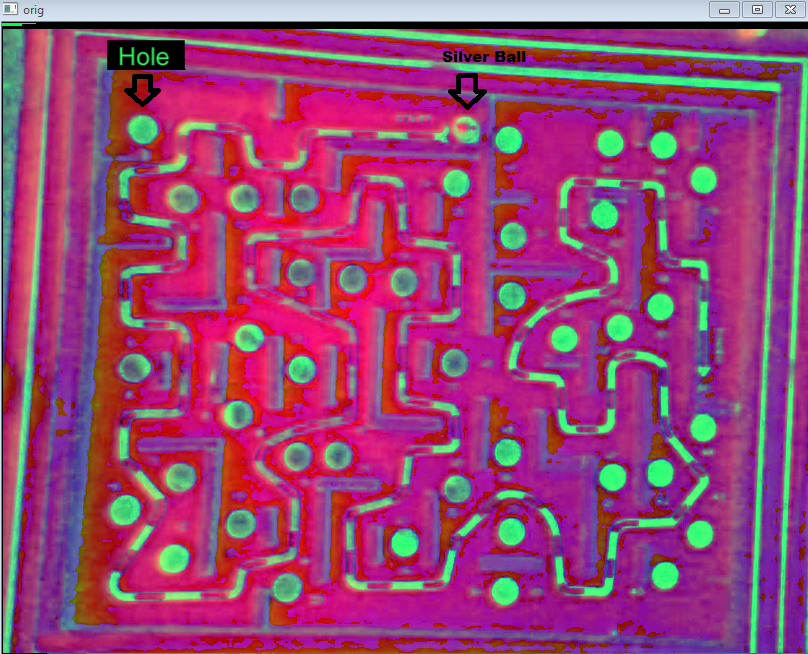

Currently, if we attempt to use HSV, the silver ball is really difficult to distinguish from the black holes on the game board, as seen in the picture below.

Alternatively we could use a colored ball, but we are opting to avoid using HSV for ball detection since it makes the problem too easy. For more of a challenge, we will keep the ball in the RGB color space and attempt to detect the ball.

Niraj started working with template matching and created a great picture of our ball to be used as a template.

Template matching is a brute-force algorithm, where you basically slide the template across the entire image and score every position until you find a ‘close enough’ match. If you have a large image, it can take a long time to find the best match.
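The sliding search described above can be sketched in plain NumPy. This is only an illustration that scores each position with the sum of squared differences. The function name `match_template_ssd` and the synthetic data are assumptions, and in practice we call OpenCV's `cv2.matchTemplate` instead of writing the loops ourselves.

```python
import numpy as np

def match_template_ssd(image, template):
    """Slide template over image; return ((x, y), score) for the lowest SSD."""
    ih, iw = image.shape[:2]
    th, tw = template.shape[:2]
    best_score, best_xy = None, None
    for y in range(ih - th + 1):          # every vertical offset
        for x in range(iw - tw + 1):      # every horizontal offset
            patch = image[y:y + th, x:x + tw].astype(np.float64)
            score = np.sum((patch - template) ** 2)
            if best_score is None or score < best_score:
                best_score, best_xy = score, (x, y)
    return best_xy, best_score

# Synthetic test image: dark background with a bright patch pasted in.
rng = np.random.default_rng(0)
image = rng.integers(0, 50, size=(40, 60)).astype(np.uint8)
template = rng.integers(150, 255, size=(8, 8)).astype(np.uint8)
image[10:18, 25:33] = template

best_xy, best_score = match_template_ssd(image, template)
print(best_xy, best_score)
```

The two nested loops are why the cost grows so quickly with image size, and why shrinking the search area pays off.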

Luckily for us, the OpenCV libraries include a cool feature called Region of Interest (ROI). This handy feature allows us to designate a sub-region within an image to work with. This way, we can reduce our search space from say 640×480 down to 80×80. This yields a great increase in speed while performing the algorithm. If you designate an ROI, almost every OpenCV function will only work on that region that you specify. It is great!

For our ball detector, we wanted to have the ROI follow the ball as it moves through the maze. However, when you use an ROI, the coordinates of the sub-image are different from those of the original image (e.g. the ball’s center was at (100,100) in the original image, but within the ROI the ball’s center is at (20,20)). That really threw Niraj, Mike, and me off, since the ROI would no longer follow the ball.

I was confused and decided to look into it further. I asked Patrick for help and he suggested that this could be a vector algebra problem. I decided to draw my own picture of the problem with vectors and was soon able to get the correct values to update my ROI from one frame to another. It was pretty exciting to see the ROI follow our ball and keep it centered. Definitely a confidence booster!
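Here is a small sketch of the kind of vector bookkeeping involved, using the numbers from the example above. The helper names `roi_to_image` and `recenter_roi` are made up for this post and this is not necessarily the exact code in my program. The idea is that a point in the full image is the ROI's top-left corner plus the point's position inside the ROI.

```python
def roi_to_image(roi_origin, point_in_roi):
    """Convert a point from ROI coordinates to full-image coordinates."""
    return (roi_origin[0] + point_in_roi[0], roi_origin[1] + point_in_roi[1])

def recenter_roi(ball_center, roi_size, image_size):
    """Top-left corner of an ROI centered on the ball, clamped to the image."""
    x = ball_center[0] - roi_size[0] // 2
    y = ball_center[1] - roi_size[1] // 2
    x = max(0, min(x, image_size[0] - roi_size[0]))
    y = max(0, min(y, image_size[1] - roi_size[1]))
    return (x, y)

roi_origin = (80, 80)      # where the current 80x80 ROI starts in the image
ball_in_roi = (20, 20)     # ball center reported by the matcher, in ROI coords
ball_in_image = roi_to_image(roi_origin, ball_in_roi)
new_origin = recenter_roi(ball_in_image, (80, 80), (640, 480))
print(ball_in_image, new_origin)
```

With these numbers the ball maps back to (100, 100) in the full image, and the next ROI starts at (60, 60) so the ball sits in its center.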

The ball detector was working great, but there were some problems. When the ball reached high speeds, motion blur smeared the ball in the image and caused the template matching to fail. To make matters worse, it turns out that in our test video the left side of the board was significantly darker than the middle, so our template did not match up entirely there.

I decided to do some research on Google for possible solutions, and came across a technical paper called “Face Tracking Using Motion-Guided Dynamic Template Matching” by Liang Wang, Tieniu Tan, and Weiming Hu. In this paper, the authors described an effective way to use template matching to detect faces. There were some important ideas that came from this paper.

First, templates (such as faces) are not static and usually change from frame to frame. They should not change drastically, but they definitely do not remain the same throughout a video. Therefore it is important to update our template as the video runs. We can do this by using a simple IIR filter. The filter the paper uses is NewTemplate = PreviousTemplate*alpha + bestNewTemplateMatch*(1-alpha). In my program, I set alpha to 0.85 and use the OpenCV function addWeighted() to make the updates.
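The update rule above can be written out in NumPy as a rough equivalent of what `cv2.addWeighted(previous, alpha, best_match, 1 - alpha, 0)` computes. The function name `update_template` and the flat test patches are assumptions for illustration only.

```python
import numpy as np

def update_template(previous, best_match, alpha=0.85):
    """Blend the old template with the newest match (simple IIR filter)."""
    blended = (alpha * previous.astype(np.float64)
               + (1.0 - alpha) * best_match.astype(np.float64))
    # Round and clip back into the 8-bit range used by the images.
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)

previous = np.full((4, 4, 3), 100, dtype=np.uint8)    # stand-in old template
best_match = np.full((4, 4, 3), 200, dtype=np.uint8)  # stand-in new match
updated = update_template(previous, best_match)
print(updated[0, 0])
```

With alpha at 0.85, each new frame only nudges the template by 15% of the difference, so one bad match cannot wreck it right away.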

Second, there needs to be a confidence measure to make sure that we are not adding garbage or incorrect data to our template. For example, if our template matcher chooses a hole as a match to our template, we definitely need to tell it that it is wrong. My confidence measure is the average of all the pixels in each channel of our RGB ball template. I use absolute error to compare our template with the new match. Importantly, I decided to average only the pixels closest to the center of the templates, since this keeps the brightness of the background from skewing the absolute error.
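A rough sketch of this kind of confidence check is below. The names `center_channel_means` and `is_confident_match`, the `margin`, and the `max_error` threshold are all hypothetical values picked for the example, not the ones in my program.

```python
import numpy as np

def center_channel_means(patch, margin):
    """Mean of each color channel over the central region of an HxWx3 patch."""
    center = patch[margin:patch.shape[0] - margin,
                   margin:patch.shape[1] - margin]
    return center.reshape(-1, patch.shape[2]).astype(np.float64).mean(axis=0)

def is_confident_match(template, candidate, margin=2, max_error=20.0):
    """Accept the candidate only if every channel mean is close to the template's."""
    error = np.abs(center_channel_means(template, margin)
                   - center_channel_means(candidate, margin))
    return bool(np.all(error <= max_error)), error

template = np.full((8, 8, 3), 180, dtype=np.uint8)   # stand-in ball template
ball_like = np.full((8, 8, 3), 170, dtype=np.uint8)  # similar patch...
ball_like[0, :] = 255                                # ...with bright background
ball_like[-1, :] = 255                               # along two edges
hole_like = np.full((8, 8, 3), 20, dtype=np.uint8)   # dark patch, like a hole

ok_ball, err_ball = is_confident_match(template, ball_like)
ok_hole, err_hole = is_confident_match(template, hole_like)
print(ok_ball, err_ball, ok_hole, err_hole)
```

Because only the central pixels are averaged, the bright edge rows in `ball_like` do not affect its score, while the dark patch is rejected.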

Lastly, we need a backup plan in case we cannot find a template match within our threshold. I opted to increase my ROI range and to continue to use the previous template until the ball was located again. It would be great if we had a predictor to tell us where the ball could be if we ever lose track of it, but for now this looks like it is working quite nicely. I am proud of the results and it was great using techniques found in a technical paper.
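The fallback logic can be sketched as a tiny state update. The function name `track_step`, the growth step, and the size limits are invented for this example, and the strings stand in for real template images.

```python
def track_step(roi_size, template, blended_template, confident,
               base_size=80, growth=40, max_size=480):
    """Return (next ROI size, template to use) after one frame."""
    if confident:
        # Good match: accept the updated template and shrink the ROI back down.
        return base_size, blended_template
    # No trustworthy match: keep the old template and widen the search area.
    return min(roi_size + growth, max_size), template

roi_size, template = 80, "template_v0"
history = []
for frame, confident in enumerate([True, False, False, True], start=1):
    roi_size, template = track_step(roi_size, template,
                                    "template_v%d" % frame, confident)
    history.append((roi_size, template))
print(history)
```

In this run the ROI grows to 120 and then 160 while the ball is lost, the template stays at `template_v1` during that time, and everything resets once a confident match returns.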

Below is a video showing my results. The two images of our ball show the current match for our ball and the current template that we have been updating. Note that when the red box is shown, we are detecting the ball. If the red box disappears, that means that our template matching method is having trouble finding an accurate match. This video shows the most difficult part of our tracking problem: when the ball is moving fast. When the ball is not moving fast, it is very easy to track. I opted to use the normalized correlation coefficient matching method since it provides great results and copes better with lighting differences.
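To show why the normalized correlation coefficient copes with lighting changes, here is a NumPy sketch of the score for a single patch. In OpenCV this method is selected by passing `cv2.TM_CCOEFF_NORMED` to `cv2.matchTemplate`. The function name `ncc` and the random test data are assumptions for illustration.

```python
import numpy as np

def ncc(patch, template):
    """Normalized correlation coefficient between two same-sized patches."""
    p = patch.astype(np.float64) - patch.mean()        # remove mean brightness
    t = template.astype(np.float64) - template.mean()
    denom = np.sqrt(np.sum(p * p) * np.sum(t * t))     # remove contrast scale
    return 0.0 if denom == 0 else float(np.sum(p * t) / denom)

rng = np.random.default_rng(1)
template = rng.integers(50, 200, size=(8, 8)).astype(np.float64)
darker = 0.5 * template - 10                 # same pattern, dimmer lighting
unrelated = rng.integers(50, 200, size=(8, 8)).astype(np.float64)

score_darker = ncc(darker, template)
score_unrelated = ncc(unrelated, template)
print(score_darker, score_unrelated)
```

Subtracting the mean and dividing by the overall contrast means a uniformly darker copy of the same pattern still scores essentially 1, which helps on the dim side of our board.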

http://www.youtube.com/watch?v=eAP24qYz7sk

We still have many things to get done, but we are on the right track. Wish us luck!

~Chris